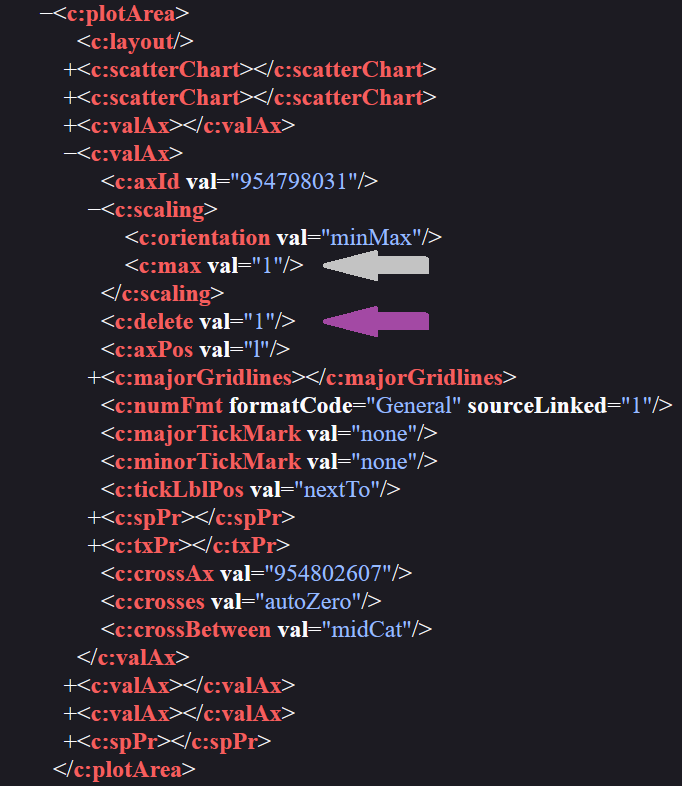

I collected the following data (on a system with 16 GB of RAM and an Intel Core i5-9600K CPU @ 3.70GHz) to understand how the time Excel stays unresponsive and its working set change with the number of grid lines. As in the previous examples, the maximum value of the PVVA is set to 1 (`<c:max val="1"/>`):

| Grid lines number | Working set | Non-response time | Prolonged DoS |
|---|---|---|---|
| 1 000 000 | 1.2 GB | ∼1 second | NO |
| 5 000 000 | 5.1 GB | ∼6 seconds | NO |
| 5 500 000 | 5.25 GB | ∼6.5 seconds | NO |
| 6 500 000 | 7.4 GB | ∼9 seconds | NO |
| 7 000 000 | 8.82 GB | ∼11.5 seconds | NO |
| 10 000 000 | 10.95 GB | ∼17 seconds | NO |
| UNKNOWN THRESHOLD | EXHAUSTED | ∞ | YES |
| 99 999 999 999 | EXHAUSTED | ∞ | YES |

I don't know whether there is an exact number of grid lines (UNKNOWN THRESHOLD) beyond which, regardless of the PC's hardware, Excel always ends up in an infinite loop and never manages to draw them all. What is certain, however, is that on a typical home PC of 2023 it's possible to make Excel draw enough grid lines to put the system into a very prolonged DoS condition.
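As a rough sanity check, the measurements above can be turned into a back-of-the-envelope estimate. The sketch below is only an extrapolation under a strong assumption: that the working set keeps growing roughly linearly with the number of grid lines, which the data does not confirm beyond 10 000 000 lines. The names `measurements`, `RAM_GB` and `estimate_working_set_gb` are illustrative, not part of any Excel API.

```python
# Measurements copied from the table above:
# (grid lines, working set in GB, unresponsive time in seconds)
measurements = [
    (1_000_000, 1.2, 1.0),
    (5_000_000, 5.1, 6.0),
    (5_500_000, 5.25, 6.5),
    (6_500_000, 7.4, 9.0),
    (7_000_000, 8.82, 11.5),
    (10_000_000, 10.95, 17.0),
]

RAM_GB = 16  # RAM of the test system

# Working set per million grid lines, for each measurement.
gb_per_million = [ws / (n / 1_000_000) for n, ws, _ in measurements]
avg_gb_per_million = sum(gb_per_million) / len(gb_per_million)


def estimate_working_set_gb(grid_lines: int) -> float:
    """Linear extrapolation (ASSUMPTION: memory grows linearly with lines)."""
    return avg_gb_per_million * grid_lines / 1_000_000


print(f"average: {avg_gb_per_million:.2f} GB per million grid lines")
for n in (10_000_000, 99_999_999_999):
    est = estimate_working_set_gb(n)
    print(f"{n:>15,} lines -> ~{est:,.0f} GB (exceeds RAM: {est > RAM_GB})")
```

With these figures the average comes out at roughly 1.1 GB per million grid lines, so under this unverified linear assumption the 99 999 999 999 lines requested by the exploit would need on the order of 100 TB, far more than the 16 GB available. The measured time per million lines also grows (about 1 second at 1 000 000 lines versus about 1.7 seconds at 10 000 000), so the unresponsive time appears to scale worse than linearly.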

We were finally able to reproduce Excel's vulnerable condition from scratch and exploit it ✅

In a nutshell:

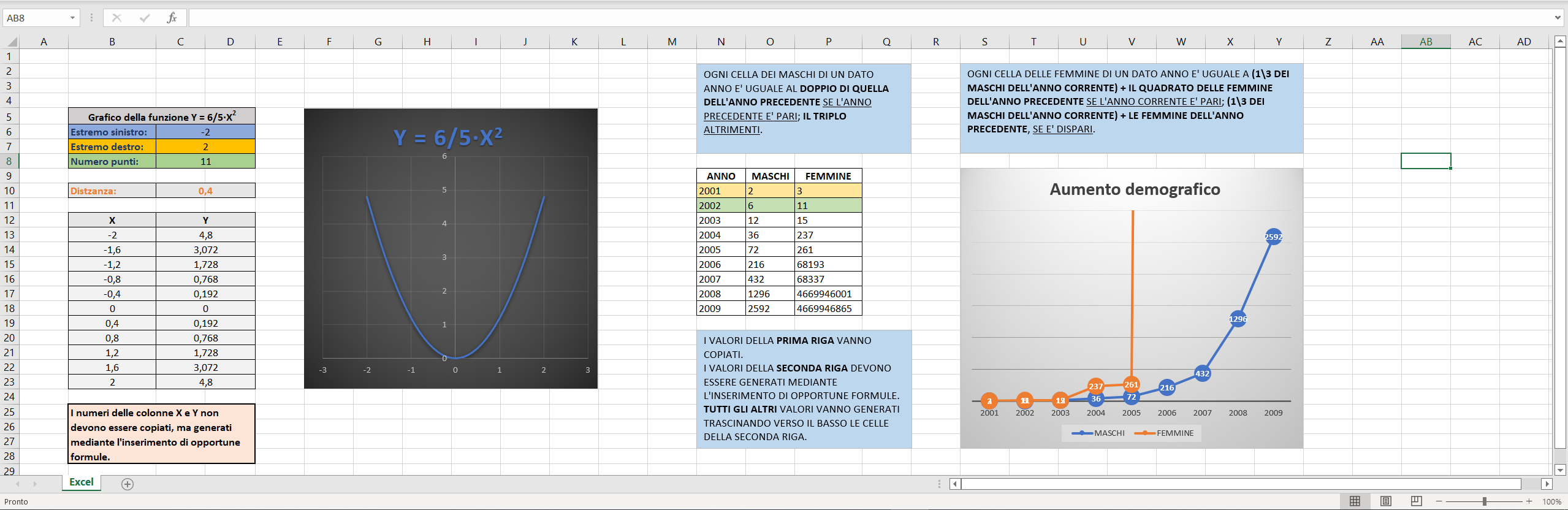

-

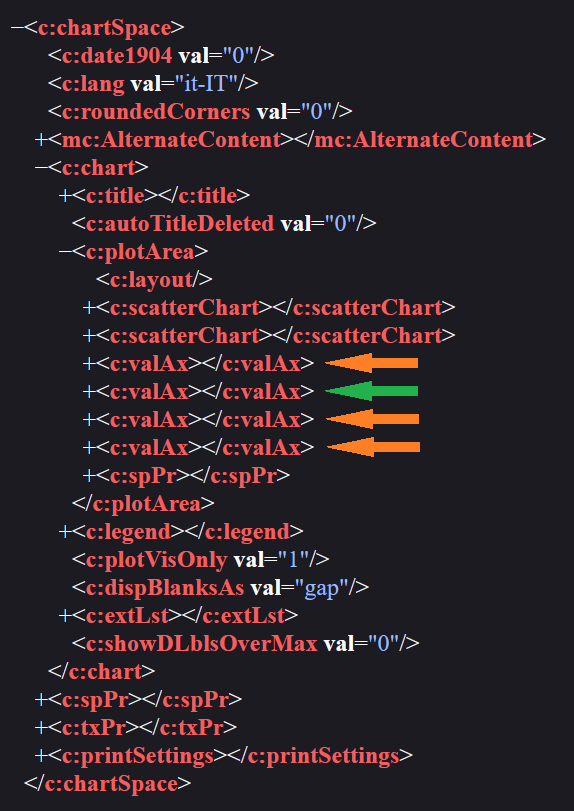

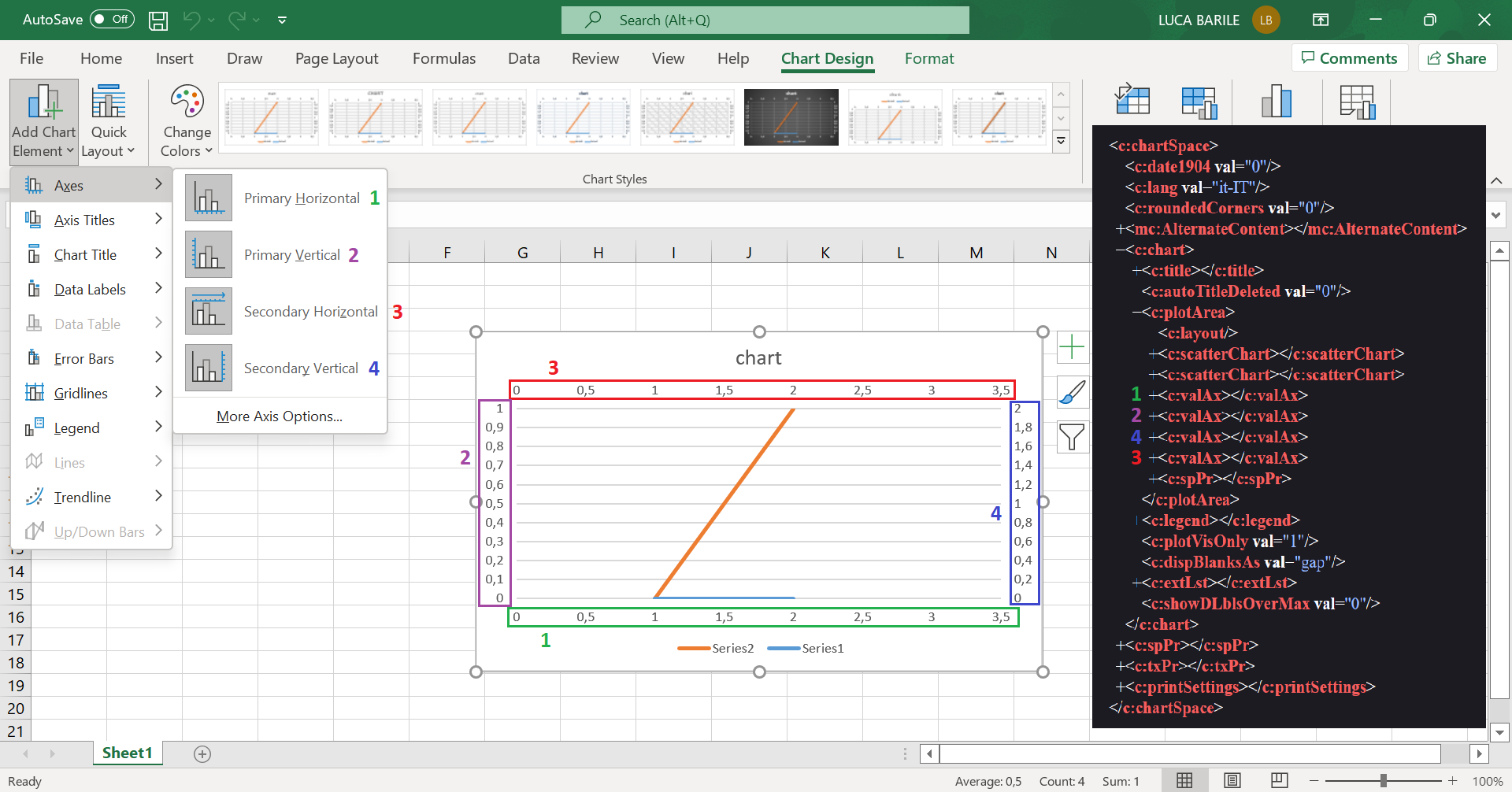

The vulnerability lies in the way Excel handles the deletion of the PVVA (in which the maximum value has been set) of a chart in which the SVVA (in which the maximum value hasn't been set) is visible. In this case Excel deletes the PVVA but continues to use its maximum value to decide how many horizontal grid lines to draw in the chart, even if the SVVA has no maximum value set. This leads Excel to draw more grid lines than it should (within a limited space) if the scale of the SVVA increases during the chart building process.

- The vulnerability can be exploited to cause a DoS condition on the system by forcing Excel to greatly increase the scale of the SVVA. To do this, just set specific chart data so that the chart lines greatly exceed the maximum value of the SVVA; Excel is then forced to change its scale to represent them.

While the DoS problem is triggered by the representation of a very large number of grid lines within a limited space, I suppose it can be split into at least two sub-problems:

1. The mere operation of drawing a grid line which, repeated many times (almost 100 billion in the case of the exploit just described), could require a lot of RAM and time.

2. All the operations needed to fit the grid lines in a limited space, including the calculation of the distance that must separate one line from the next.

I suppose that point 2 could be even more problematic because, as we've seen here, Excel is coded to draw the grid lines so that they're equidistant from each other. This means that by increasing their number in a limited space, their distance decreases very quickly, forcing Excel to perform calculations and operations with very small decimal numbers.

Let's go back to the previous animation in which Excel, due to its vulnerability, adds 10 grid lines to the existing ones for each additional SVVA unit (I ignore the first grid line to simplify calculations), and look at how the distance between them changes (knowing that they must be equidistant) as the number of SVVA units increases.

If we assume that the height h of the chart is 1 and that the thickness of the lines is negligible, and we add the new lines between the first and last line to simplify calculations, then to draw n equidistant grid lines we must space them at a distance d equal to h/(n+1) from each other.

Since in our case Excel adds 10 grid lines for each SVVA additional unit, we get the following values:

| SVVA units | Grid lines number (n) | Grid lines distance (d) |
|---|---|---|
| 1 | 10 | 0.090909090 |
| 10 | 100 | 0.009900990 |
| 100 | 1000 | 0.000999000 |
| 1000 | 10 000 | 0.000099990 |
| 10 000 | 100 000 | 0.000009999 |
| 100 000 | 1 000 000 | 0.000000999 |
| 1 000 000 | 10 000 000 | 0.000000099 |
| ... | ... | ... |
| 99 000 000 | 990 000 000 | 0.000000001 |
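The values in this table follow directly from d = h/(n+1) with h = 1 and n = 10 × (SVVA units). A minimal sketch that recomputes them is shown below; the constant `LINES_PER_UNIT` and the function `grid_line_distance` are illustrative names, and exact fractions are used only so that the rounding happens once, at print time.

```python
from fractions import Fraction

LINES_PER_UNIT = 10  # from the animation: 10 grid lines per SVVA unit


def grid_line_distance(n: int, h: int = 1) -> Fraction:
    """Distance between n equidistant lines placed inside a chart of height h."""
    return Fraction(h, n + 1)


print(f"{'SVVA units':>11} {'n':>13} {'d':>12}")
for units in (1, 10, 100, 1_000, 10_000, 100_000, 1_000_000, 99_000_000):
    n = LINES_PER_UNIT * units
    d = grid_line_distance(n)
    print(f"{units:>11,} {n:>13,} {float(d):>12.3e}")
```

Every tenfold increase in SVVA units shrinks the distance by roughly a factor of ten, which is the behaviour the table illustrates.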

We can see that, as the number of grid lines grows, their distance converges asymptotically to zero without ever reaching it, and a floating-point underflow could occur in the variable that stores the distance (if it's stored as a mantissa and an exponent) because the value could become too small to be represented by the exponent portion.

This could lead to some memory corruption issue or an infinite loop... But this is just an assumption, and I didn't investigate it any further.
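The floating-point concern can be made a little more concrete with a purely hypothetical sketch. Nothing in this article establishes how Excel stores grid line positions or how it iterates over them; the code below only assumes, for illustration, an IEEE 754 single-precision (binary32) position that is advanced by d at each step, and compares it with double precision (binary64). The helpers `to_f32` and `step_is_absorbed_f32` are illustrative names.

```python
import struct
import sys


def to_f32(x: float) -> float:
    """Round a Python float (IEEE 754 binary64) to the nearest binary32 value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def step_is_absorbed_f32(y: float, d: float) -> bool:
    """True if y + d rounds back to y when everything is kept in binary32."""
    y32, d32 = to_f32(y), to_f32(d)
    return to_f32(y32 + d32) == y32


h = 1.0
n = 990_000_000   # last row of the table above
d = h / (n + 1)   # about 1e-9

# The distance itself is nowhere near the smallest representable value.
print(d > sys.float_info.min)        # True (binary64 smallest normal ~2.2e-308)
print(to_f32(d) > 0.0)               # True (still non-zero in binary32)

# Halfway up the chart, a binary32 position can no longer advance by d,
# while a binary64 position still can.
print(step_is_absorbed_f32(0.5, d))  # True
print(0.5 + d == 0.5)                # False
```

Under these assumptions, the distance does not need to become unrepresentable for trouble to start: once d is smaller than half the spacing between adjacent representable positions, a loop of the form "add d until the top of the chart is reached" would stop making progress, which is one conceivable way to end up in an infinite loop. Whether Excel does anything like this remains unverified.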